Understanding Theory and Application of 3D

Safa Radwan

The applications of 3D

3D animation is used in many media, including training simulators for pilots and surgeons and 3D maps for engineers, but it is most commonly used in movies and animation (e.g. Disney, Pixar, DreamWorks) and video games (e.g. Blizzard and FromSoftware; many AAA games use 3D animation).

Both animation and games started out using 2D animation, but over the years these big companies came to favour 3D animation over 2D.

This is because companies could do more

with 3D animation.

(A comparison of an old 2D Mario game with a newer 3D one, both produced by Nintendo)

For example, Nintendo moved its Mario games from 2D to 3D (pictured above: on the left is a 2D Mario game, and on the right is one of Mario’s first 3D games). The transition from 2D to 3D Mario was successful because it allowed players to move in more directions than just left and right. It also opened up more ways to play the game, and made it more immersive, because the 3D world lets players interact more with the environment and see it from multiple viewpoints.

However, the transition of Sega’s Sonic the Hedgehog games from 2D to 3D was largely considered a failure, mainly because the gameplay did not translate well to 3D.

It wasn’t just games that became 3D: movies also started to transition from 2D to 3D.

(Inside Out movie: 2D fan art vs 3D official art)

(Inside Out: at the end of the movie, the daughter returns home after running away)

3D is also more appealing to a general audience because it can be used for more mature themes. Unlike cartoons, which are generally associated with children, 3D animation can reach a wider demographic.

For example, Inside Out would not be as effective as a 2D movie, because it is easier to relate to 3D characters than to 2D ones, and 3D animation allows the filmmakers to create scenes that range from emotional to funny, unlike 2D animation, which is usually only comedic.

API (Application Programming Interface)

An API is the link between the hardware and the code the programmer is typing. Using an API can reduce the time it takes to do a job, and makes it possible for programs to work with other apps (for example, when Google is used as an add-on, an API is used to read the data and translate it into text).

Some examples of graphics APIs are DirectX 12, Vulkan and OpenGL.
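The idea of an API as a common layer can be sketched in Python. This is only an illustration under assumptions: `GraphicsAPI`, the two logging backends and `render_frame` are hypothetical names, and real APIs such as DirectX, Vulkan and OpenGL are called very differently. The point is that the application code is written once against the interface, and each backend stands in for a different driver or platform.

```python
from abc import ABC, abstractmethod


class GraphicsAPI(ABC):
    """Hypothetical common interface: the application only ever talks to this."""

    @abstractmethod
    def clear(self, colour): ...

    @abstractmethod
    def draw_triangles(self, vertices): ...


class LoggingBackendA(GraphicsAPI):
    """Hypothetical stand-in for one platform's driver; records calls instead of drawing."""

    def __init__(self):
        self.log = []

    def clear(self, colour):
        self.log.append(f"A: clear to {colour}")

    def draw_triangles(self, vertices):
        self.log.append(f"A: draw {len(vertices) // 3} triangle(s)")


class LoggingBackendB(GraphicsAPI):
    """A second hypothetical backend with the same interface."""

    def __init__(self):
        self.log = []

    def clear(self, colour):
        self.log.append(f"B: clear to {colour}")

    def draw_triangles(self, vertices):
        self.log.append(f"B: draw {len(vertices) // 3} triangle(s)")


def render_frame(api: GraphicsAPI):
    """Application code: written once, works with any backend implementing the interface."""
    api.clear((0, 0, 0))
    api.draw_triangles([(0, 0, 0), (1, 0, 0), (0, 1, 0)])
```

Calling `render_frame` with either backend issues the same two commands; only the backend decides how they are carried out.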

DirectX

DirectX is a collection of APIs developed by Microsoft, and Direct3D is the part of it that handles 3D graphics; DirectX 12 was the latest version at the time of writing. DirectX only runs on Microsoft platforms (such as Windows and Xbox), so it is less versatile and less widely available than cross-platform APIs such as Vulkan and OpenGL. This is because Microsoft wants creators to use only its own platforms, which reduces accessibility.

Vulkan

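Vulkan is more specific than DirectX, which also includes APIs for things such as sound and input: Vulkan is dedicated to graphics and computation on graphics cards. It is mainly used in games, but can also be used in other 3D applications. Developed by the Khronos Group, Vulkan lets programmers develop for different platforms, as it supports a variety of operating systems, including Microsoft Windows, Linux and Android; on macOS it can run through a translation layer such as MoltenVK.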

OpenGL (Open Graphics Library)

This API runs on many different platforms, for example Windows, macOS and Linux, and mobile platforms such as iPhone and iPad (through OpenGL ES, a version of OpenGL for embedded systems). Tweaked versions of OpenGL also run on the PlayStation.

3D Graphics Pipeline

A graphics pipeline is the sequence of stages used to turn the instructions on a computer into graphics on a screen. In Direct3D, the stages are: Input Assembler, Vertex Shader, Hull Shader, Tessellator, Domain Shader, Geometry Shader, Rasterizer, Pixel Shader and Output Merger. I will go through each of these stages and its purpose.

(Simplified diagram of the data flow in a Direct3D graphics pipeline)

Microsoft. (2018). Graphics Pipeline. Available: https://msdn.microsoft.com/en-us/library/windows/desktop/ff476882(v=vs.85).aspx.

Input Assembler

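This is the first stage in the graphics pipeline. It reads vertex data (and, optionally, index data) from memory and assembles it into primitives such as points, lines and triangles, so that the geometry can be processed and rendered in later stages. This can be thought of as the building-block stage: it’s the foundation.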
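A minimal Python sketch of what the Input Assembler does with a triangle list, assuming positions are plain (x, y, z) tuples and every three indices form one triangle. `assemble_triangles` is a hypothetical name; on a real GPU this step is fixed-function hardware configured through the graphics API.

```python
def assemble_triangles(vertex_buffer, index_buffer):
    """Hypothetical helper: group a flat index buffer into triangles of vertex positions.

    vertex_buffer: list of (x, y, z) positions
    index_buffer:  flat list of indices, three per triangle (a "triangle list")
    """
    if len(index_buffer) % 3 != 0:
        raise ValueError("a triangle list needs a multiple of 3 indices")
    triangles = []
    for i in range(0, len(index_buffer), 3):
        a, b, c = index_buffer[i:i + 3]
        triangles.append((vertex_buffer[a], vertex_buffer[b], vertex_buffer[c]))
    return triangles


# A square built from 4 shared vertices and 2 triangles.
square_vertices = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
square_indices = [0, 1, 2, 0, 2, 3]
```

Using indices lets the two triangles share corner vertices instead of storing six separate positions.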

Vertex Shader

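In the second stage, a small program called a vertex shader is run once on each vertex from the Input Assembler stage. It usually transforms the vertex’s position (for example, from the model’s own coordinates to its position on the screen) and can also pass on data such as colours and texture coordinates.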
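Real vertex shaders are small GPU programs (written in a shading language such as HLSL) that usually multiply each position by 4×4 matrices. The Python sketch below is a simplified stand-in under stated assumptions: the object is placed in the world by adding an offset, and the camera sits at the origin looking along +z with a basic perspective divide. `vertex_shader` and `focal_length` are hypothetical names.

```python
def vertex_shader(position, offset, focal_length=1.0):
    """Hypothetical per-vertex program: model space -> world -> 2D screen position."""
    # Model -> world: move the vertex by the object's position in the scene.
    x, y, z = (p + o for p, o in zip(position, offset))
    # World -> screen: perspective divide, camera at the origin looking along +z (assumed).
    if z <= 0:
        raise ValueError("vertex is at or behind the camera")
    # Keep z as well, so later stages can test which surfaces are in front.
    return (focal_length * x / z, focal_length * y / z, z)
```

Dividing by z is what makes distant vertices appear closer to the centre of the screen: the same vertex pushed twice as far away lands half as far from the centre.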

Hull Shader, Tessellator, Domain Shader

These cover the third, fourth and fifth stages, and are optional. What happens here is hardware tessellation: the GPU increases or decreases the level of detail by subdividing the original geometry into more or fewer faces.
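The effect of tessellation (more faces describing the same shape) can be illustrated with simple midpoint subdivision in Python. This is a swapped-in technique for illustration only: real hardware tessellation is driven by tessellation factors from the hull shader, with the domain shader positioning the new vertices, and `subdivide` is a hypothetical helper.

```python
def midpoint(p, q):
    """Point halfway between two vertices."""
    return tuple((a + b) / 2 for a, b in zip(p, q))


def subdivide(triangles, levels):
    """Hypothetical helper: split every triangle into 4 smaller ones, `levels` times.

    Each level multiplies the face count by 4, raising the level of detail.
    """
    for _ in range(levels):
        refined = []
        for a, b, c in triangles:
            ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
            refined += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
        triangles = refined
    return triangles
```

One triangle becomes 4 after one level and 16 after two; choosing fewer levels for distant objects is the "decrease the level of detail" side.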

Geometry Shader

This stage is also optional. Unlike the vertex shader, which works on one vertex at a time, the geometry shader operates on entire primitives, such as a whole triangle. Here geometry can be created or destroyed, depending on the effect the developer is trying to create. For example, this stage can generate the particles used for effects such as rain or explosions.
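Below is a Python sketch of a geometry-shader-style step for rain particles. It assumes each particle arrives as a single point and that the camera looks along the z axis, so each square is built in the x-y plane; `particle_geometry_shader` is a hypothetical name, and real geometry shaders are written in a shading language such as HLSL.

```python
def particle_geometry_shader(points, size):
    """Hypothetical stage: turn each particle point into a small square (2 triangles).

    Points below the ground (y < 0) emit nothing, showing that geometry can also be destroyed.
    """
    half = size / 2
    out = []
    for x, y, z in points:
        if y < 0:
            continue  # destroyed: this particle produces no triangles
        bl, br = (x - half, y - half, z), (x + half, y - half, z)  # bottom corners
        tl, tr = (x - half, y + half, z), (x + half, y + half, z)  # top corners
        out += [(bl, br, tr), (bl, tr, tl)]  # created: two new triangles per point
    return out
```

Three raindrops with one below the ground produce four triangles: two squares, and nothing for the discarded drop.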

Rasterizer

In this stage, the rasterizer works out which pixels each triangle covers, and therefore what we can see. This is done after clipping and culling the geometry. Culling is when objects or triangles that fall completely outside the view frustum (the region of the scene the player can see on the screen) are discarded. Clipping is when triangles that lie partly inside the view frustum are cut at its edge and reshaped into new triangles.
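The culling and clipping decision can be sketched in Python for a single triangle. The sketch assumes the view frustum has already been transformed into a cube running from -1 to 1 on every axis (OpenGL’s convention; Direct3D uses 0 to 1 for depth), and `classify_triangle` is a hypothetical name. The test is conservative: a triangle that is outside the view but not entirely beyond one face of the cube is reported as needing clipping.

```python
def classify_triangle(tri):
    """Hypothetical helper: return 'culled', 'inside' or 'clip' for a triangle.

    tri: three (x, y, z) vertices, already mapped so the visible region is -1..1 on each axis.
    """
    for axis in range(3):
        # Every vertex beyond the same face of the view volume: nothing can be visible.
        if all(v[axis] < -1 for v in tri) or all(v[axis] > 1 for v in tri):
            return "culled"
    if all(-1 <= c <= 1 for v in tri for c in v):
        return "inside"  # wholly visible: passed straight on to rasterization
    return "clip"  # straddles the edge: would be cut and re-triangulated
```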

Pixel Shader

The pixel shader takes the output of the previous stages and shades each pixel, calculating its colour from data such as normals, texture coordinates and lighting.
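A pixel shader can be as simple as Lambert (diffuse) lighting, where a surface is brightest when it faces the light directly. This Python sketch assumes colours are 0-255 RGB tuples and that the surface normal and light direction are already known for the pixel; `pixel_shader` is a hypothetical name, and real pixel shaders run on the GPU in a shading language.

```python
import math


def normalize(v):
    """Scale a vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def pixel_shader(normal, light_dir, base_colour):
    """Hypothetical Lambert shading: brightness follows the cosine of the angle to the light."""
    n, l = normalize(normal), normalize(light_dir)
    intensity = max(0.0, sum(a * b for a, b in zip(n, l)))  # dot product, clamped at 0
    return tuple(round(c * intensity) for c in base_colour)
```

A surface facing the light keeps its full colour, one tilted 45° away gets about 71% brightness, and one facing away is black.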

Output Merger

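This is the final stage. Everything from the previous stages of the graphics pipeline comes together here: the output merger combines the shaded pixels with the image built so far, using depth testing to decide which surfaces are in front and blending for effects such as transparency, to build the final image.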
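The depth test done in the Output Merger can be sketched with a depth buffer (z-buffer). This Python sketch assumes each shaded pixel (fragment) arrives as (x, y, depth, colour) and that a smaller depth means closer to the camera; `output_merge` is a hypothetical name, and blending for transparency is left out.

```python
def output_merge(width, height, fragments, background=(0, 0, 0)):
    """Hypothetical helper: build the final image from shaded fragments using a depth buffer."""
    colour_buffer = [[background] * width for _ in range(height)]
    depth_buffer = [[float("inf")] * width for _ in range(height)]
    for x, y, depth, colour in fragments:
        if depth < depth_buffer[y][x]:  # depth test: keep only the nearest surface
            depth_buffer[y][x] = depth
            colour_buffer[y][x] = colour
    return colour_buffer


# A red surface and a nearer blue surface land on the same pixel; blue wins.
image = output_merge(2, 2, [(0, 0, 5.0, (255, 0, 0)),
                            (0, 0, 2.0, (0, 0, 255)),
                            (1, 0, 1.0, (0, 255, 0))])
```

Because the depth test decides visibility per pixel, fragments can arrive in any order and the nearest one still ends up in the image.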

Different rendering techniques can be used to produce the final image; some examples include:

· Scanline/rasterization: this type of rendering is used by developers who are under time constraints, because it can render graphics quickly. It does this by rendering an image on a polygon-by-polygon basis, instead of pixel by pixel. Games using this technique can reach 60 frames per second, especially when the poly count is low.

· Raytracing: this rendering technique takes a lot more time, because for every pixel on the screen a ray is traced from the camera through that pixel to the nearest 3D object it hits. This can create photorealistic graphics, but it takes time, and not all hardware is capable of running it at the highest quality.
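The difference between the two techniques is the order of the loops: rasterization goes polygon by polygon and asks which pixels each one covers, while ray tracing goes pixel by pixel and asks which object each ray hits first. The Python sketch below renders tiny text images both ways; the camera set-up, the characters used and all function names are assumptions for illustration, and it omits lighting, shadows and reflections.

```python
import math


def edge(a, b, p):
    """Edge function: its sign says which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(triangles, width, height):
    """Rasterization: loop over polygons, marking every pixel centre each one covers."""
    image = [["." for _ in range(width)] for _ in range(height)]
    for a, b, c in triangles:
        # A real rasterizer only visits pixels inside the triangle's bounding box.
        for y in range(height):
            for x in range(width):
                p = (x + 0.5, y + 0.5)
                w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
                # Inside when p is on the same side of all three edges (either winding order).
                if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                    image[y][x] = "#"
    return image


def ray_sphere_hit(origin, direction, centre, radius):
    """Distance t where origin + t*direction first meets the sphere, or None if it misses."""
    oc = [o - k for o, k in zip(origin, centre)]
    a = sum(d * d for d in direction)
    b = 2 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4 * a * c  # quadratic discriminant: negative means the ray misses
    if disc < 0:
        return None
    t = (-b - math.sqrt(disc)) / (2 * a)
    return t if t > 0 else None


def ray_trace(spheres, width, height):
    """Ray tracing: loop over pixels, firing one ray from the camera through each pixel."""
    image = [["." for _ in range(width)] for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Assumed camera: at the origin, looking along +z through a screen at z = 1
            # that spans -1..1 horizontally and vertically.
            d = ((x + 0.5) / width * 2 - 1, 1 - (y + 0.5) / height * 2, 1.0)
            nearest = None
            for centre, radius, label in spheres:
                t = ray_sphere_hit((0.0, 0.0, 0.0), d, centre, radius)
                if t is not None and (nearest is None or t < nearest[0]):
                    nearest = (t, label)  # keep only the closest object along the ray
            if nearest is not None:
                image[y][x] = nearest[1]
    return image


# Usage: print a small ray-traced sphere as rows of characters.
for row in ray_trace([((0, 0, 3), 1, "A")], 9, 5):
    print("".join(row))
```

Even in this toy version, the ray tracer does work for every pixel and every object, which hints at why it is the slower of the two.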

Rendering software used:

· Mental Ray: this software was packaged with older versions of Maya, and is arguably the most versatile: it is relatively fast, and competent at rendering most objects. This software uses raytracing and radiosity techniques.

· V-Ray: this software is typically used in conjunction with 3ds Max to render environments and architecture.

Geometric Theory & Mesh Construction

Geometric theory is used for 3D modelling. The most commonly used types of model are:

NURBS surfaces (non-uniform rational basis splines): these are used when modelling for engineering and automotive design, because they can describe smooth curved surfaces very accurately.

Polygonal models: these models are more commonly used in the industry, and are made up of many separate polygons combined into a polygon mesh.
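To show how a NURBS shape is computed, here is a Python sketch that evaluates a NURBS curve (a surface applies the same idea in two directions) using the Cox-de Boor formula for the basis functions. The example assumes a degree-2 curve whose control points and weights are the standard ones for an exact quarter of a unit circle, something ordinary polynomial curves cannot represent exactly; `basis` and `nurbs_point` are hypothetical names.

```python
import math


def basis(i, p, t, knots):
    """Cox-de Boor recursion: the i-th B-spline basis function of degree p at parameter t."""
    if p == 0:
        return 1.0 if knots[i] <= t < knots[i + 1] else 0.0
    left = right = 0.0
    if knots[i + p] > knots[i]:  # skip terms whose denominator would be zero
        left = (t - knots[i]) / (knots[i + p] - knots[i]) * basis(i, p - 1, t, knots)
    if knots[i + p + 1] > knots[i + 1]:
        right = ((knots[i + p + 1] - t) / (knots[i + p + 1] - knots[i + 1])
                 * basis(i + 1, p - 1, t, knots))
    return left + right


def nurbs_point(t, ctrl, weights, knots, degree):
    """Point on a 2D NURBS curve: a weighted average of the control points.

    Valid for t in [first knot, last knot); the half-open test in `basis` excludes the very end.
    """
    x = y = total = 0.0
    for i, ((cx, cy), w) in enumerate(zip(ctrl, weights)):
        n = basis(i, degree, t, knots) * w  # basis value scaled by this point's weight
        x += n * cx
        y += n * cy
        total += n
    return (x / total, y / total)  # dividing by the summed weights makes it "rational"


# Quarter of a unit circle: degree 2, middle weight sqrt(2)/2 (standard construction).
quarter_ctrl = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
quarter_weights = [1.0, math.sqrt(2) / 2, 1.0]
quarter_knots = [0, 0, 0, 1, 1, 1]
```

Every point evaluated on this curve lies at distance 1 from the origin (up to rounding), so the curve is a true circular arc rather than an approximation.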

The mesh is what defines the shape of these models; it is a collection of vertices, edges and faces.

· Vertices are the points in the mesh. Each vertex has its own x, y and z coordinates, and can be moved around to distort and shape the model; box modelling is one technique where this is commonly used.

· Edges are the lines joining pairs of vertices, and together they create the wireframe.

· Faces fill the space enclosed by the vertices and edges. The number of faces in a mesh is called the poly count, and the polygon density is referred to as the resolution.
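The mesh structure described above can be sketched in Python as plain lists: each vertex is an (x, y, z) tuple and each face lists the indices of its corner vertices. This is an illustration under assumptions (a unit cube with square faces); `edges_of`, `triangle_count` and `move_vertex` are hypothetical helper names, not part of any particular tool.

```python
# A cube: 8 vertices (x, y, z) and 6 square faces, each listing 4 vertex indices.
vertices = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),  # bottom square
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),  # top square
]
faces = [
    (0, 1, 2, 3), (4, 5, 6, 7),  # bottom, top
    (0, 1, 5, 4), (1, 2, 6, 5),  # sides
    (2, 3, 7, 6), (3, 0, 4, 7),
]


def edges_of(faces):
    """Hypothetical helper: each edge once, from consecutive corners of every face."""
    edges = set()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            edges.add((min(a, b), max(a, b)))  # ignore direction so shared edges count once
    return edges


def triangle_count(faces):
    """Hypothetical helper: an n-sided face splits into n - 2 triangles."""
    return sum(len(face) - 2 for face in faces)


def move_vertex(vertices, index, offset):
    """Hypothetical helper: copy of the vertices with one shifted (as in box modelling)."""
    moved = list(vertices)
    moved[index] = tuple(c + d for c, d in zip(vertices[index], offset))
    return moved
```

For a closed mesh like this cube the counts satisfy V - E + F = 2 (8 - 12 + 6), and counting triangles instead of squares doubles the poly count from 6 to 12. Moving a vertex changes the shape but not the counts, because the faces still refer to the same indices.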

The example below shows how vertices, edges and faces come together on a polygon:

The Cartesian coordinate system, devised by René Descartes, describes the position of each point by its distance along perpendicular axes. In 3D, the X, Y and Z axes are used to define 3-dimensional shapes.

(Illustration of a Cartesian coordinate plane)

3D Development Software

Different types of software are used to create 3D shapes, games and animations. One popular example is Unreal Engine. This game engine has been used to create impressive 3D games, but it has also been used to create short movies, training simulations, visualisations, films and even TV shows.

(Pictured below is Unreal Engine being used to create and render the green-screen backgrounds for the TV show ‘LazyTown’.)

However, Unreal Engine uses C++, which is difficult for beginners to learn, and users must pay a royalty fee if it is used to create a product that is sold.

Another piece of software commonly used in 3D modelling and development is Unity, which can be used to create games in both 2D and 3D. Unity is free to use, but at the time of writing the professional version could be bought for $75 a month. Unity scripts are written in C#; older versions also supported UnityScript and Boo, which have since been deprecated. Unity is a good game engine for beginners because it is free and versatile, it uses C#, which is beginner-friendly, and it has a large number of assets and plugins available online for free to help create your first game.

While Unreal Engine and Unity can be used to create 3D models as well as games, Maya is 3D software used only to create backgrounds, models, animation and effects. However, it can be more useful for game developers to create the 3D visuals, environments, characters and animation for their game in Maya and then export them into the game, because Maya has many more options for creating 3D models. Maya can also be used to create pre-rendered cut scenes for games, movies and animation.

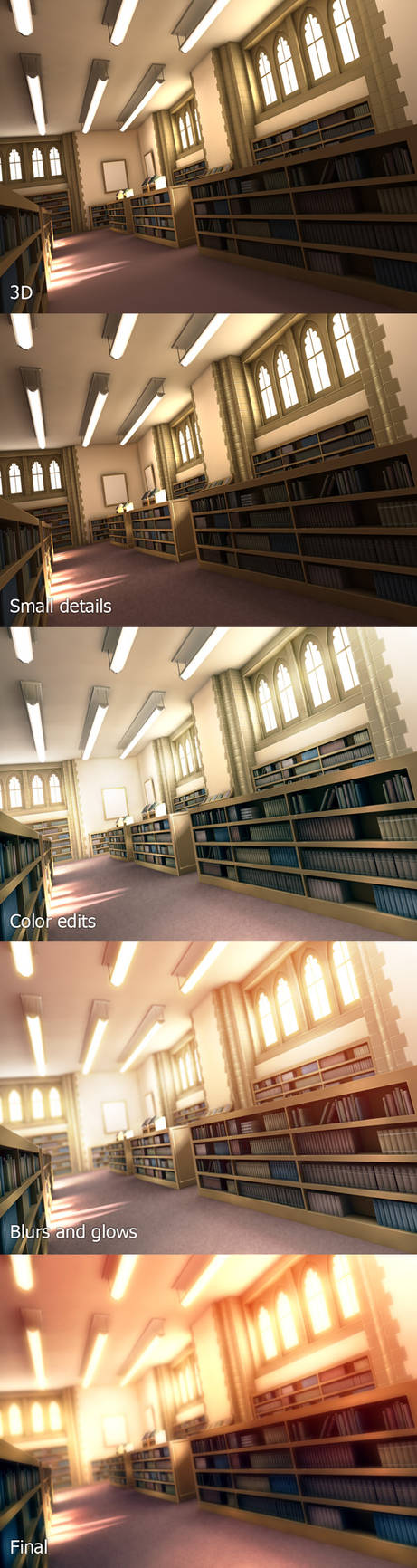

(Created by Mike Inel using Maya. This was exported and used as a background for a game.)


To convert files, you need to know how to save them properly. For example, if you want to use a .SAI file (from PaintTool SAI) in Maya, you need to save it as a .PSD, .PNG or .JPG first, and then open it in Maya. Maya saves work in its .MB (Maya Binary) format. A tool called FBX Converter can help convert files such as .DXF, .3DS and .OBJ into a format Maya can open, and vice versa.
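Of the formats mentioned above, .OBJ is a simple text format: lines starting with `v` give a vertex position and lines starting with `f` list a face’s vertices using 1-based indices. The Python sketch below reads and writes only these basics; it is not how FBX Converter works, and `parse_obj` / `write_obj` are hypothetical names.

```python
def parse_obj(text):
    """Hypothetical reader for vertex positions ('v') and faces ('f') in Wavefront OBJ text.

    Only the basics: texture/normal references such as '1/1/1' are dropped,
    OBJ's 1-based indices become 0-based, and negative (relative) indices are not supported.
    """
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        if parts[0] == "v":
            vertices.append(tuple(float(c) for c in parts[1:4]))
        elif parts[0] == "f":
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return vertices, faces


def write_obj(vertices, faces):
    """Hypothetical writer: the same data back out as OBJ text (1-based indices)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


sample = "# one triangle\nv 0 0 0\nv 1 0 0\nv 0 1 0\nf 1/1 2/2 3/3\n"
```

Because the format is plain text, a round trip through `write_obj` and `parse_obj` gives back the same vertices and faces.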

A few other plugins for Maya include:

DOSCH Textures

This free plugin includes ready-made textures for Maya, such as grass and road surfaces. These textures can be used for animations, computer graphics and general use.

FaceConnect

This plugin for Maya is useful for animators, because it allows them to animate 3D face models more accurately, as it uses motion capture to work with Maya when creating face rigs. FaceConnect includes gadgets such as Lips Pucker, which helps add expression to the corners of the lips.


These plugins are useful because a basic Maya installation does not include these kinds of tools for animating the face or extra textures, so they can be very helpful for beginners.

Constraints

Constraints are important for the artist

to consider when creating 3D models and environments. Types of constraints to consider

include the file size, render time, and polygon count.

Polygon count.

If an artist creates a high-poly 3D environment for a mobile game, it will be difficult for the device to render and will slow the game down. So the artist must consider which platform the game or movie will be on before creating the 3D model, so that the model runs smoothly but also has the best possible graphics for that hardware.
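A polygon budget check can be sketched in Python. The budget numbers below are invented for illustration only, as are the scene and the `check_budget` name; real budgets depend on the game, the engine and the target hardware.

```python
# Hypothetical triangle budgets per platform (illustrative numbers, not real guidance).
TRIANGLE_BUDGET = {"mobile": 100_000, "console": 2_000_000}


def check_budget(objects, platform):
    """Hypothetical helper: total the scene's triangles and compare with the platform budget."""
    total = sum(objects.values())
    return total, total <= TRIANGLE_BUDGET[platform]


# Hypothetical scene: triangle count per object.
scene = {"terrain": 40_000, "buildings": 50_000, "hero character": 30_000}
```

The sample scene has 120,000 triangles: over the hypothetical mobile budget, so the artist would need to simplify it for that platform, but well within the console one.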

Old consoles and hardware did not have much processing power, so they could not render a high number of polygons. This meant that artists creating 3D work were very limited and could not use as many polygons as they can today, so the models could not look very realistic.

However, today’s consoles can process far more polygons than those of 5 or 10 years ago. The PS4 was calculated to have 43 times the processing power of the PS2.

(The image above shows the original Shadow of the Colossus, released on the PS2 in 2005, on the left, and the remake with updated graphics and visuals, released on the PS4 in February 2018, on the right.)

Render time.

Having a high poly count will also increase the time it takes to render the project. A high poly count needs powerful hardware, such as a high-quality CPU (central processing unit) or GPU (graphics processing unit), to render 3D models and environments.

The more powerful the hardware, the more polygons it can handle and render at once; however, this type of equipment can be very expensive and not everyone can afford it. Over time, hardware has become more powerful and more capable of rendering millions of polygons, but there are still limits on how many polygons can be in one scene: too many can cause the game to lag and drop below 30 frames per second.
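Frame rate and render time are two views of the same number: at a target frame rate, each frame has 1000 / fps milliseconds to render. A short Python sketch (the function names are hypothetical):

```python
def frame_budget_ms(fps):
    """Time available to render one frame, in milliseconds, at a target frame rate."""
    return 1000.0 / fps


def achieved_fps(frame_time_ms):
    """Frame rate reached if every frame takes frame_time_ms milliseconds to render."""
    return 1000.0 / frame_time_ms
```

At 30 fps each frame gets about 33.3 ms and at 60 fps about 16.7 ms; a scene with so many polygons that each frame takes 40 ms runs at 25 fps, below the 30 fps threshold.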

File size.

This is important because, in industry, many different people usually work together on one project, including animators, concept artists and coders, who need to share files between people and computers. The size of each file also needs to be kept efficient to make sure the finished work fits onto the media it is designed for.
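A rough estimate shows how poly count drives file size. This Python sketch assumes an uncompressed layout of 8 floats per vertex (position, normal and texture coordinate, 4 bytes each) and 32-bit indices, ignoring textures and compression; the layout and the `mesh_size_bytes` name are assumptions, not any particular engine’s format.

```python
BYTES_PER_FLOAT = 4
FLOATS_PER_VERTEX = 3 + 3 + 2  # assumed layout: position (xyz) + normal (xyz) + texture coord (uv)
BYTES_PER_INDEX = 4            # assumed 32-bit indices


def mesh_size_bytes(vertex_count, triangle_count):
    """Hypothetical estimate of a mesh's raw vertex and index data (no textures, no compression)."""
    vertex_bytes = vertex_count * FLOATS_PER_VERTEX * BYTES_PER_FLOAT  # 32 bytes per vertex
    index_bytes = triangle_count * 3 * BYTES_PER_INDEX                 # 12 bytes per triangle
    return vertex_bytes + index_bytes
```

With this layout, a mesh with 1,000,000 vertices and 2,000,000 triangles needs about 56,000,000 bytes (56 MB) before any textures, which is why poly count matters when files have to be shared or shipped.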

Overall, 3D animation has become a vital part of today’s world. It has overtaken 2D in games, movies and other media. It allows us to create amazing and realistic renditions not just of the real world, but of mythical creatures and other worlds.

Resources/references used:

https://www.youtube.com/watch?v=KjCudOaZ7P4

Game Engine Architecture (PDF file)

Unreal Engine 4 for Design Visualization (e-book)

https://www.3dtotal.com/interview/716-top-10-plugins-for-maya-by-paul-hatton
